Bio

I recently received my PhD in AI from Seoul National University, advised by Prof. Byoung-Tak Zhang. My research lies at the intersection of robot learning and multimodal AI, where I focus on building generalist robots by leveraging semantic priors from large pretrained models. Specific topics include:

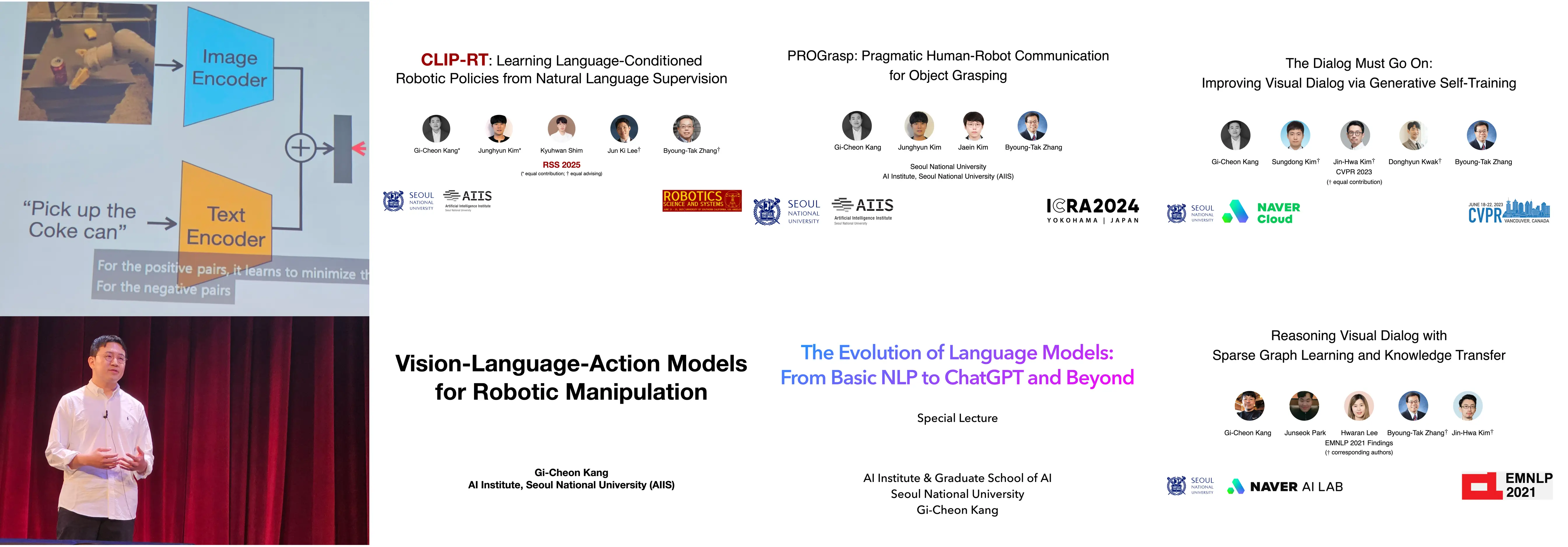

- Robotics Foundation Models: Building vision-language-action (VLA) models for generalist robot policies, with a focus on grounding web-scale knowledge to robotic control (CLIP-RT).

- Embodied Reasoning: Investigating vision-language models (VLMs) for task planning (Socratic Planner) and reasoning about language instructions (PROGrasp, PGA, GVCCI).

- Vision & Language: Developing interactive VLMs that engage in continuous, grounded communication with humans about images (GST, SGL, DAN) and videos (MASN).

My PhD research has been supported by fellowships from the Youlchon Foundation and IPAI. I was fortunate to collaborate with researchers at NAVER AI and SK T-Brain.

Before starting my PhD, I completed a master’s degree in Cognitive Science at Seoul National University. Studying cognitive science sparked my interest in AI and interdisciplinary research. I earned my bachelor’s degree in Computer Science from Ajou University.

News

- [Nov 2025] I gave a talk at the Dept. of Immersive Media Engineering at Sungkyunkwan University (SKKU).

- [Oct 2025] I gave a talk at the Graduate School of AI at UNIST.

- [Jul 2025] Our vision-language navigation work is accepted to BMVC 2025.

- [Apr 2025] Happy to announce that our work (CLIP-RT) is accepted to RSS 2025!

- [Apr 2025] I was selected as a member of RSS Pioneers!

- [Nov 2024] Happy to release a new robotics foundation model, CLIP-RT!

- [Aug 2024] I was selected as a recipient of the Youlchon AI Star Fellowship.

- [Jun 2024] PGA is accepted at IROS 2024.

- [Apr 2024] A preprint for embodied instruction following (Socratic Planner) is released.

- [Mar 2024] I wrote my research statement about what I’ve been studying.

- [Mar 2024] A new preprint (Continual Vision-and-Language Navigation) is released.

- [Jan 2024] PROGrasp is accepted to ICRA 2024!

- [Dec 2023] I attended Brainlink 2023.

- [Nov 2023] I’ll give a talk at the Dept. of Energy Resources Engineering at Seoul National University (Title: “The Evolution of Language Models: From Basic NLP to ChatGPT and Beyond”).

- [Oct 2023] Two preprints (PROGrasp and PGA) are released!

- [Jun 2023] One paper is accepted to IROS 2023!

- [Mar 2023] Happy to announce that our paper is accepted to CVPR 2023!

- [Jun 2022] One paper is accepted to the ICML 2022 Pre-training Workshop.

- [May 2022] Thrilled to announce that our new preprint is released!

- [Apr 2022] One paper is accepted to the CVPR 2022 HCIS Workshop.

- [Dec 2021] I gave an invited talk at the Korea Software Congress.

- [Oct 2021] One paper is accepted to the NeurIPS 2021 CtrlGen Workshop.

- [Aug 2021] One paper is accepted to Findings of EMNLP 2021.

- [May 2021] One paper is accepted to ACL 2021.

- [Sep 2020] I’m starting my Ph.D. this fall.

- [Jun 2020] Starting in July, I’ll join the SNU AI Institute (AIIS) as a researcher.

- [Jan 2020] Our paper has been accepted to ICASSP 2020!

- [Dec 2019] Starting in January, I’ll be a research intern at SK T-Brain!

- [Nov 2019] I gave a spotlight talk at the Video Turing Test workshop, ICCV 2019.

- [Oct 2019] I gave an invited talk at the SK Telecom AI Center.

- [Aug 2019] Excited to announce that our paper has been accepted to EMNLP 2019.

- [Jun 2019] Our proposed method ranked 3rd in the Visual Dialog Challenge 2019!

- [Aug 2018] Our paper is accepted to the ECCV 2018 Workshop on VizWiz Grand Challenge.

Highlights (Here are all papers)

CLIP-RT: Learning Language-Conditioned Robotic Policies from Natural Language Supervision

RSS 2025

CoRL 2024 Workshop on Language and Robot Learning

Project Page

Paper

Code

PROGrasp: Pragmatic Human-Robot Communication for Object Grasping

The Dialog Must Go On: Improving Visual Dialog via Generative Self-Training

CVPR 2023

ICML 2022 Workshop on Pre-training: Perspectives, Pitfalls, and Paths Forward

Project Page

Paper

Code

Slides

Video

Invited Talks

Vision-Language-Action Models for General-Purpose Robot Manipulation (Link)

Dept. of Immersive Media Engineering, Sungkyunkwan University (SKKU), Nov. 2025

Graduate School of AI, UNIST, Oct. 2025

CLIP-RT: Learning Language-Conditioned Robotic Policies from Natural Language Supervision

Robotics: Science and Systems (RSS), June 2025

Foundation Models for Robotics: Learning and Interaction

Robotics: Science and Systems (RSS) Pioneers Workshop, June 2025

Vision-Language-Action Models for Robotic Manipulation

Korea Robotics Society (KROS) Workshop on Mobile Manipulation, May 2025

PROGrasp: Pragmatic Human-Robot Communication for Object Grasping

IEEE International Conference on Robotics and Automation (ICRA), May 2024

The Evolution of Language Models: From Basic NLP to ChatGPT and Beyond

Dept. of Energy Resources Engineering, Seoul National University, Nov. 2023

The Dialog Must Go On: Improving Visual Dialog via Generative Self-Training

IEEE RO-MAN Workshop on Learning by Asking for Intelligent Robots and Agents, Aug. 2023

Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer

KSC 2021 - Top-tier Conference Paper Presentation Session, Dec. 2021

Annual Conference on Human and Cognitive Language Technology, Oct. 2021

Dual Attention Networks for Visual Reference Resolution in Visual Dialog

ICCV 2019 - Video Turing Test Workshop (Spotlight Talk), Nov. 2019

SK Telecom AI Center, Sep. 2019

Services

🟥 = ML or AI / 🟦 = Robotics / 🟩 = NLP / 🟨 = Workshops
